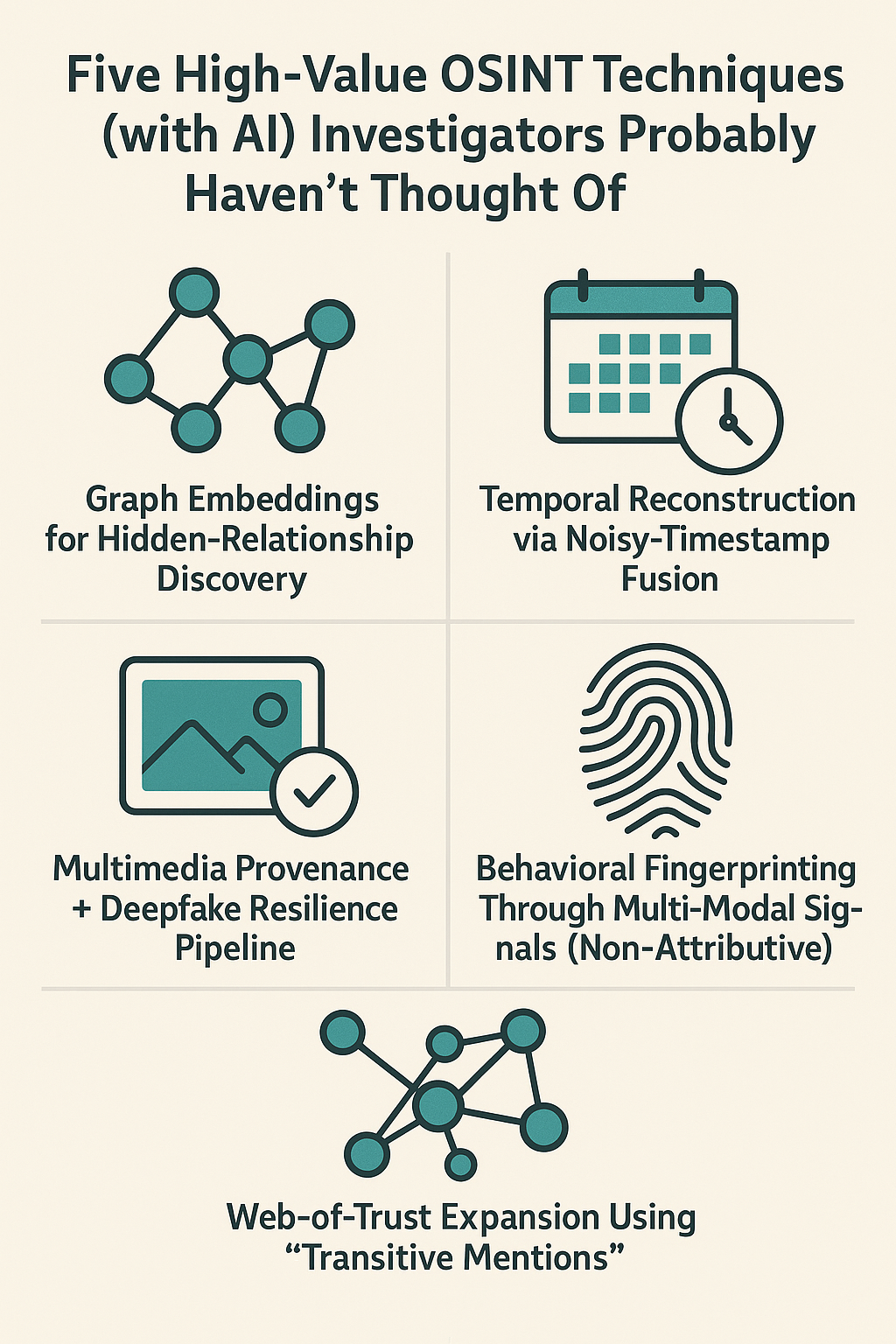

Clear, precise, and legally defensible OSINT is about making the invisible visible, not about breaking rules. Below are five advanced, high-impact techniques that combine traditional open-source methods with modern AI to surface relationships, provenance, and intent that are often missed. For each technique I cover what it does, why it matters, a compact lawful workflow, the role AI plays, and guardrails to keep your work defensible.

1) Graph embeddings for hidden-relationship discovery

What it does: Turn disparate mentions (social profiles, forum posts, news stories, domain WHOIS records, PDFs) into a unified vector space so you can find non-obvious associations by similarity rather than exact matches.

Why it matters: People and organizations leave fractured traces. Exact matching misses the soft signals — shared wording, similar phrasing, reused boilerplate, near-identical image crops, subtle hostname reuse. Embeddings let you find those near-matches at scale.

Workflow (high level):

- Ingest sources (HTML, PDFs, OCR text extracted from images, metadata) into a canonical document store.

- Use an embedding model to vectorize each document or entity record (sentence/paragraph level + metadata).

- Build a nearest-neighbor index (approximate NN for performance) and run similarity queries from any seed (email, photo, phrase).

- Visualize top hits as a graph and prioritize by cross-source corroboration (e.g., same IP + phrase reuse + shared payment processor).

- Investigate top clusters manually and capture provenance.
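A minimal sketch of the similarity-query step, assuming plain-text documents already sit in a store keyed by ID. To keep it dependency-free, a character-trigram count vector stands in for a real embedding model, and a brute-force scan stands in for an approximate nearest-neighbor index. The document IDs, texts, and the 0.3 score cutoff are hypothetical.

```python
import math
from collections import Counter


def trigram_vector(text: str) -> Counter:
    """Stand-in "embedding": counts of lowercase character trigrams.

    A real pipeline would call a sentence or image embedding model here.
    """
    t = " ".join(text.lower().split())  # normalize case and whitespace
    return Counter(t[i:i + 3] for i in range(len(t) - 2))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def nearest_neighbors(seed: str, docs: dict, k: int = 3, min_score: float = 0.3):
    """Exact brute-force k-NN; an ANN index would replace this scan at scale."""
    query = trigram_vector(seed)
    scored = [(doc_id, cosine(query, trigram_vector(text))) for doc_id, text in docs.items()]
    hits = [s for s in scored if s[1] >= min_score]
    return sorted(hits, key=lambda s: s[1], reverse=True)[:k]


# Hypothetical mentions pulled from different sources.
docs = {
    "forum-17": "Donations are quietly rerouted to a shell account, ask yourself why.",
    "blog-03": "Our bake sale raised funds for the new shelter roof.",
    "post-88": "donations quietly rerouted to a shell account - ask yourself why!",
}
hits = nearest_neighbors("Donations are quietly rerouted to a shell account", docs)
for doc_id, score in hits:
    print(f"{doc_id}: {score:.2f}")
```

Swapping `trigram_vector` for a real model's output leaves the query logic unchanged. The two near-duplicate posts surface despite different punctuation and casing, while the unrelated post falls below the cutoff; either way, hits are leads to check against primary sources.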

AI role: Embeddings + clustering reveal semantic similarity; LLMs help summarize clusters into hypotheses and produce follow-up searches.

Guardrails: Don’t use embeddings as “proof.” Treat them as hypothesis generators and always back up with primary sources and timestamps.

Certified in Open Source Intelligence (C|OSINT)

Advance Your Career with a Globally Recognized OSINT Certification

2) Temporal reconstruction via noisy-timestamp fusion

What it does: Rebuild a subject’s activity timeline by fusing imperfect timestamps (EXIF, web cache, social post times, archive capture times) and using AI to reconcile contradictions and flag likely edits, reposts, or fabrications.

Why it matters: Many investigations hinge on sequence — who posted what when. Single timestamps are easily manipulated; combining signals gives a resilient timeline.

Workflow (high level):

- Collect every timestamp candidate for an item (file metadata, CMS timestamps, CDN headers, Wayback captures, quoted timestamps within text).

- Normalize to UTC and label by trust level (e.g., server log > user-submitted timestamp).

- Use a probabilistic model or LLM prompt to weigh sources and propose a best-estimate timeline with confidence bands.

- Highlight anomalies (e.g., content claiming 2018 but first captured in 2024 + EXIF mismatch).

- Seek corroboration (third-party capture, independent witnesses).
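The weighing step can be sketched as a trust-weighted median, one simple stand-in for the probabilistic model described above. The trust weights, source labels, and 24-hour tolerance are illustrative assumptions, and the sketch returns a point estimate plus anomaly flags rather than full confidence bands. It also assumes every timestamp carries an explicit UTC offset; naive values are rejected rather than guessed.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical trust weights (higher = more reliable), ordered so that a
# server log outranks a user-submitted timestamp; tune per investigation.
TRUST = {"server_log": 1.0, "archive_capture": 0.9, "cms": 0.6,
         "exif": 0.4, "user_submitted": 0.2}


def to_utc(ts: str) -> datetime:
    """Parse an ISO 8601 timestamp that carries an offset and normalize it to UTC."""
    dt = datetime.fromisoformat(ts)
    if dt.tzinfo is None:
        # Refuse to guess a time zone; unresolved values belong in the report as such.
        raise ValueError(f"timestamp has no UTC offset: {ts!r}")
    return dt.astimezone(timezone.utc)


def fuse(candidates, tolerance=timedelta(hours=24)):
    """candidates: list of (source_kind, iso_timestamp) for one item.

    Returns (estimate, flagged): the trust-weighted median time, and the
    source kinds that disagree with it by more than `tolerance`.
    """
    points = sorted(((TRUST[kind], to_utc(ts), kind) for kind, ts in candidates),
                    key=lambda p: p[1])
    half = sum(w for w, _, _ in points) / 2
    acc, estimate = 0.0, points[-1][1]
    for w, dt, _ in points:
        acc += w
        if acc >= half:  # first point where cumulative trust reaches half the total
            estimate = dt
            break
    flagged = [kind for _, dt, kind in points if abs(dt - estimate) > tolerance]
    return estimate, flagged


estimate, flagged = fuse([
    ("user_submitted", "2018-05-01T12:00:00+00:00"),  # date the content claims
    ("exif", "2024-03-02T09:15:00+01:00"),
    ("cms", "2024-03-02T10:05:00+00:00"),
    ("archive_capture", "2024-03-02T10:40:00+00:00"),
])
print(estimate.isoformat(), flagged)  # 2024-03-02T10:05:00+00:00 ['user_submitted']
```

A median is used instead of a mean so that a single fabricated value, like the 2018 claim here, cannot drag the estimate years off; it is flagged for corroboration instead.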

AI role: LLMs and lightweight probabilistic models help weigh competing timestamps and produce an explainable summary of why one ordering is likelier.

Guardrails: Preserve and archive all raw timestamps and reasoning. Never alter original evidence timestamps in your report.

3) Multimedia provenance + deepfake resilience pipeline

What it does: Combine classical forensic checks (EXIF, compression artifacts, sensor noise) with AI-based provenance models to rapidly triage images and video for authenticity and origin signals.

Why it matters: Visual media is both powerful and fragile. Automated provenance scoring lets you focus human expertise where it matters.

Workflow (high level):

- Extract all embedded metadata, container headers, and frame-level stats.

- Run photo/video forensic routines: error level analysis, sensor pattern noise, recompression traces.

- Apply an AI provenance model to estimate the likelihood of manipulation and suggest the probable generation tool or editing steps.

- Cross-reference with reverse image search (multi-engine), platform upload histories, and archival services to establish earliest capture.

- Produce an evidence-grade provenance report and recommend whether lab forensic analysis is needed.
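As one example of the metadata-extraction step, this sketch walks a JPEG's header segments using only the standard library and reports whether EXIF (APP1) and JFIF (APP0) blocks are present. Platforms commonly strip EXIF on upload, so its absence is itself a provenance signal. The sketch does not decode EXIF tags or perform error level analysis, it assumes a well-formed file without fill bytes between segments, and the sample bytes are synthetic.

```python
import struct


def jpeg_segments(data: bytes):
    """Return (marker, length, body) for each header segment up to Start of Scan.

    Minimal walker: assumes no 0xFF fill bytes between segments and stops at SOS,
    where entropy-coded image data begins.
    """
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    segments, pos = [], 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xD9:  # EOI: end of image
            break
        # Segment length is big-endian and counts its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        segments.append((f"FF{marker:02X}", length, data[pos + 4:pos + 2 + length]))
        if marker == 0xDA:  # SOS: stop before compressed data
            break
        pos += 2 + length
    return segments


def metadata_summary(data: bytes) -> dict:
    """Flag which metadata containers are present (absence is also a signal)."""
    segs = jpeg_segments(data)
    return {
        "markers": [m for m, _, _ in segs],
        "has_exif": any(m == "FFE1" and body.startswith(b"Exif\x00\x00") for m, _, body in segs),
        "has_jfif": any(m == "FFE0" and body.startswith(b"JFIF\x00") for m, _, body in segs),
    }


def segment(marker: int, body: bytes) -> bytes:
    """Build a synthetic segment for the demo below."""
    return bytes([0xFF, marker]) + struct.pack(">H", len(body) + 2) + body


# Synthetic header: SOI, APP0 (JFIF), APP1 (EXIF), SOS, a few data bytes, EOI.
sample = (b"\xff\xd8" + segment(0xE0, b"JFIF\x00" + bytes(9))
          + segment(0xE1, b"Exif\x00\x00II") + segment(0xDA, b"\x00\x00")
          + b"\x12\x34\xff\xd9")
summary = metadata_summary(sample)
print(summary)  # {'markers': ['FFE0', 'FFE1', 'FFDA'], 'has_exif': True, 'has_jfif': True}
```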

AI role: Classifier models can detect artifacts left by generative tools and suggest likely editing steps, helping prioritize deeper review.

Guardrails: AI outputs are probabilistic. Use them to prioritize lab testing or subpoenas — not as sole conclusions.

4) Behavioral fingerprinting through multi-modal signals (non-attributive)

What it does: Build anonymized, non-identifying behavioral profiles from posting cadence, timezone signals, device fingerprint proxies (UA strings, recurring formatting quirks), and writing style to cluster likely accounts belonging to the same operator.

Why it matters: When direct identifiers are absent or obfuscated, behavior often leaks identity. Clustering behaviorally similar accounts lets investigators escalate where appropriate.

Workflow (high level):

- Collect non-PII signals only (posting patterns, timezone offsets inferred from activity, device/user agent patterns, repeated typos or phrasing).

- Vectorize features (statistical, n-gram, timing) and cluster.

- Use LLMs for stylometric summarization (e.g., “consistently uses serial comma + British spellings + 11PM UTC activity”).

- Flag clusters with high cohesion for deeper legal review and next steps (subpoena, partnership with platform).

- If escalation is authorized, use legal process or platform cooperation to obtain identity.
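A minimal sketch of the vectorize-and-cluster step using a single non-PII feature: the share of an account's posts in each UTC hour. Accounts are grouped when the cosine similarity of their hourly profiles meets a threshold (single-linkage, implemented with union-find). The account names, posting times, and 0.9 threshold are hypothetical; in practice n-gram and formatting features would be appended to the same vectors.

```python
import math
from collections import Counter
from datetime import datetime, timezone


def hourly_profile(epoch_seconds):
    """Share of an account's posts falling in each UTC hour (24 bins, non-PII)."""
    if not epoch_seconds:
        return [0.0] * 24
    hours = Counter(datetime.fromtimestamp(t, tz=timezone.utc).hour for t in epoch_seconds)
    return [hours[h] / len(epoch_seconds) for h in range(24)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def cluster(profiles, threshold=0.9):
    """Single-linkage grouping: connected components of 'cosine >= threshold'."""
    parent = {acct: acct for acct in profiles}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    ids = list(profiles)
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if cosine(profiles[a], profiles[b]) >= threshold:
                parent[find(a)] = find(b)
    groups = {}
    for acct in ids:
        groups.setdefault(find(acct), []).append(acct)
    return sorted(sorted(g) for g in groups.values())


# Hypothetical accounts: two post nightly around 21:00 UTC, one around 08:00 UTC.
DAY = 86400
base = int(datetime(2024, 1, 1, tzinfo=timezone.utc).timestamp())
posts = {
    "acct_a": [base + d * DAY + 21 * 3600 for d in range(10)],
    "acct_b": [base + d * DAY + 21 * 3600 + 1800 for d in range(10)],
    "acct_c": [base + d * DAY + 8 * 3600 for d in range(10)],
}
profiles = {acct: hourly_profile(ts) for acct, ts in posts.items()}
print(cluster(profiles))  # [['acct_a', 'acct_b'], ['acct_c']]
```

A 24-bin histogram treats 23:00 and 00:00 as unrelated bins, and shared cadence alone is weak evidence, which is why the output is a cluster to review rather than an identification.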

AI role: LLMs for stylometric summarization; clustering algorithms + embedding models for grouping.

Guardrails: Avoid identifying a person solely on behavioral clustering. This is an investigative lead — not proof. Respect privacy laws and platform TOS.

5) Web-of-trust expansion using “transitive mentions”

What it does: Instead of looking for direct links (follows, friends, backlinks), map transitive mentions — when A quotes B quoting C, or a comment thread cites a handle used elsewhere — and use graph ML to infer trust/endorsement networks and hidden amplification chains.

Why it matters: Malicious influence or coordinated campaigns often operate via multi-step amplification. Tracing transitive mentions exposes the chain rather than just endpoints.

Workflow (high level):

- Harvest conversational threads across platforms (forums, comment threads, quoted tweets, Telegram channels).

- Extract quote/mention relationships and normalize references (handles, URLs, screenshots).

- Build a directed graph where edges are “A referenced B at time T.”

- Use community detection and edge-weighting (by engagement, repeated cross-platform occurrences) to surface likely originators versus amplifiers.

- Use AI to synthesize narrative: “Source cluster X produced content that passed through amplifiers Y and Z before mainstream pickup.”
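The graph-building and role-labeling steps might look like the sketch below, where each record means "A referenced B at time T" and edge weight counts the distinct platforms on which that reference recurs (`first_seen` is kept for reporting). Role labels come from a simple in/out-degree heuristic, and `upstream_chain` follows the heaviest reference back toward a likely source. Community detection is omitted; a library routine such as NetworkX's `louvain_communities` could supply it. All handles and timestamps are hypothetical.

```python
from collections import defaultdict
from datetime import datetime


def build_graph(mentions):
    """mentions: (referrer, referenced, platform, iso_time), i.e. 'A referenced B at time T'.

    Returns {(A, B): {"weight": distinct platforms, "first_seen": earliest time}}.
    """
    platforms, first_seen = defaultdict(set), {}
    for src, dst, platform, ts in mentions:
        t = datetime.fromisoformat(ts)
        platforms[(src, dst)].add(platform)
        first_seen[(src, dst)] = min(t, first_seen.get((src, dst), t))
    return {e: {"weight": len(p), "first_seen": first_seen[e]} for e, p in platforms.items()}


def roles(edges):
    """Heuristic roles from weighted in-degree (being referenced) and out-degree."""
    w_in, w_out = defaultdict(int), defaultdict(int)
    for (src, dst), attrs in edges.items():
        w_out[src] += attrs["weight"]
        w_in[dst] += attrs["weight"]
    labels = {}
    for node in set(w_in) | set(w_out):
        if w_out[node] == 0:
            labels[node] = "candidate originator"  # referenced, cites nothing upstream
        elif w_in[node] == 0:
            labels[node] = "endpoint"  # cites others, is not itself cited
        else:
            labels[node] = "amplifier"  # both cited and citing: passes content on
    return labels


def upstream_chain(edges, start):
    """From `start`, repeatedly follow the heaviest outgoing reference (no revisits)."""
    chain, seen = [start], {start}
    while True:
        options = [(attrs["weight"], dst) for (src, dst), attrs in edges.items()
                   if src == chain[-1] and dst not in seen]
        if not options:
            return chain
        _, nxt = max(options)
        chain.append(nxt)
        seen.add(nxt)


# Hypothetical handles and platforms.
mentions = [
    ("chan_y", "src_x", "telegram", "2024-03-01T08:00:00+00:00"),
    ("chan_y", "src_x", "forum", "2024-03-01T09:00:00+00:00"),
    ("acct_z", "chan_y", "x", "2024-03-01T12:00:00+00:00"),
    ("acct_z", "chan_y", "forum", "2024-03-01T13:00:00+00:00"),
    ("news_site", "acct_z", "web", "2024-03-02T07:00:00+00:00"),
]
edges = build_graph(mentions)
print(roles(edges))
print(" -> ".join(upstream_chain(edges, "news_site")))  # news_site -> acct_z -> chan_y -> src_x
```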

AI role: LLMs help resolve fuzzy references (e.g., identifying when a screenshot references a now-deleted handle) and generate human-readable chain summaries.

Guardrails: Be explicit about confidence and provenance when presenting a chain in court or to partners. Cross-verify with platform logs when possible.

Practical AI toolkit (ethical, pragmatic)

- Embeddings: Use open or commercial sentence/image embeddings to cluster semantic similarity. Keep vector indexes auditable and retain raw source links.

- LLMs: Use them for summarization, hypothesis generation, and timestamp reconciliation — but store prompts, responses, and source links for evidentiary trails.

- Image/video models: Use provenance/detection models as triage; preserve originals and create hash-based chain-of-custody.

- Graph ML: Lightweight community detection (Louvain, Leiden) + edge weighting is usually sufficient; AI can help label clusters.

- Automation: Build repeatable pipelines that log input, model version, and outputs. Model drift matters — note model versions in reports.
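To make the logging advice concrete, here is a minimal sketch of an append-only JSON Lines audit record that ties each AI step to a SHA-256 hash of its exact input, the source link, and the model name and version. The function names, record fields, file names, and the `example-llm` model are hypothetical; adapt them to your own case-management conventions.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash an evidentiary file in chunks so large videos need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def log_step(log_path: str, *, step: str, input_bytes: bytes, source_url: str,
             model: str, model_version: str, output: str) -> dict:
    """Append one JSON Lines record linking an AI output to its exact input and model."""
    record = {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "step": step,
        "source_url": source_url,
        "input_sha256": hashlib.sha256(input_bytes).hexdigest(),
        "model": model,
        "model_version": model_version,
        "output": output,
    }
    with open(log_path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, sort_keys=True) + "\n")
    return record


# Demo in a temporary directory; the capture, model name, and version are hypothetical.
workdir = tempfile.mkdtemp()
capture = os.path.join(workdir, "capture.html")
with open(capture, "wb") as fh:
    fh.write(b"<html>archived post</html>")
with open(capture, "rb") as fh:
    raw = fh.read()
log_path = os.path.join(workdir, "audit.jsonl")
rec = log_step(log_path, step="summarize_cluster", input_bytes=raw,
               source_url="https://example.org/post/1", model="example-llm",
               model_version="2024-06-01", output="Cluster 3 reuses phrase P.")
print(rec["input_sha256"] == sha256_file(capture))  # True: same bytes, same digest
```

Appending rather than rewriting keeps earlier records untouched, and recording the input hash lets a reviewer confirm later that a model output was produced from the preserved original.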

Ethics, legality, and evidentiary hygiene (non-negotiable)

- Authority & consent: Only escalate to intrusive techniques (subpoena, platform legal requests, account de-anonymization) with legal authority. Know the jurisdictional limits.

- Minimize data collection: Collect only what you need; avoid harvesting sensitive personal data unless necessary and authorized.

- Audit trail: Log raw captures, timestamps, tool versions, and intermediate AI outputs. Save hashes of evidentiary files.

- Transparency in reporting: Distinguish facts, model outputs, hypotheses, and uncertainty. Never present AI inferences as incontrovertible facts.

- Integrity: Avoid techniques that require or encourage hacking, credential stuffing, or deception that would break laws or platform TOS.

Quick example (non-sensitive)

Imagine you’re investigating a small coordinated misinformation campaign about a local charity:

- Seed with a debunked post. Use reverse image search + provenance pipeline to check the image/video.

- Run embeddings over all mentions (social posts, forum comments, article paragraphs) to find near-duplicate phrasing — you discover three forum posts that reuse the same unusual phrase.

- Build a mention graph and use transitive mention mapping — you see one obscure channel repeatedly amplifies the phrase across platforms.

- Apply behavioral fingerprinting to cluster accounts that repost on a consistent nightly cadence in UTC+2; that cluster becomes your investigative lead.

- Archive everything, document the AI outputs and confidence, then coordinate with platform moderation or legal channels if needed.

Final note

These five techniques emphasize triangulation — combining signals, not substituting human judgment with models. Use AI to scale hypothesis generation and triage; use human investigators to confirm, corroborate, and act within law. If you want, I’ll draft a one-page SOP for any single technique above (inputs, minimal toolset, required logs, and templated report language) so your team can implement it defensibly. Which technique should I turn into a field SOP first?
